Latency Blog Series Part 2: The Three Main Causes of Latency

What causes latency? Let’s learn where ‘lag’ comes from, and why it’s such a headache.

In our first blog post, we looked at what latency is and why high latency can be such a big problem for application performance.

But if the difference between low latency and high latency is so significant, why is it so hard to give everyone low-latency connectivity?

To explore this, we’ll look at the three main causes of latency: where each one comes from, why it can be difficult to work around, and how the latency it generates affects the user experience.

So, what causes latency?

Latency Cause #1 – Distance from the Host Server

Imagine a group of friends scattered across the country who want to catch up at a bar chosen at random somewhere in that country. Instead of agreeing on a time to meet, they all agree to set off from wherever they are at the same moment.

Naturally, the friends will arrive at different times: those furthest away will take the longest to get there, while those closest will arrive first. The nearby friends might even have finished their first few drinks by the time the furthest friend walks in!

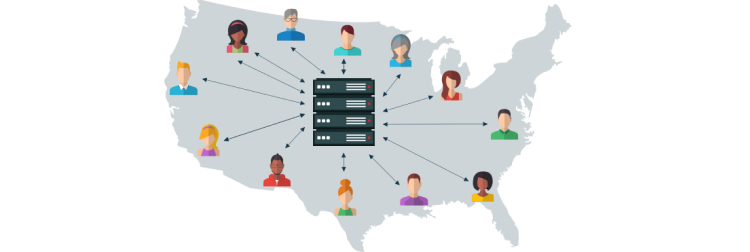

This is exactly the scenario that applications, websites, games, and services face when supporting online users. A server hosts users from all over the world, and some of them will inevitably be further away than others. Their requests have further to travel, so they take longer to complete, which means higher latency.
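To put rough numbers on this, here’s a back-of-the-envelope sketch in Python. It assumes that light in optical fibre travels at roughly 200,000 km per second (about two-thirds of its speed in a vacuum) and that the cable runs in a straight line, so it only estimates the *minimum* round-trip time imposed by distance. Real routes are longer, and every router along the way adds its own delay. The distances are hypothetical examples.

```python
# Assumption: light in optical fibre covers roughly 200,000 km per second,
# i.e. about 200 km per millisecond (roughly two-thirds of c).
FIBRE_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Assumes a straight fibre path between user and server; real routes are
    longer, and routing, queueing and processing all add delay on top.
    """
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    # The request travels out and the response travels back: 2 x distance.
    return 2 * distance_km / FIBRE_KM_PER_MS


# Hypothetical users at increasing distances from the same server.
for km in (50, 1_000, 5_000, 15_000):
    print(f"{km:>6} km -> at least {min_round_trip_ms(km):.1f} ms round trip")
```

Even this theoretical floor reaches 150 ms for a user 15,000 km away, before the server has done any work at all. No amount of server tuning removes it; only shortening the distance does.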

Geographical distance is the biggest cause of latency differences, and it’s an extremely difficult factor to work around, because you can’t change most of the variables involved: you can’t move your users, and data can only travel so fast.

Even so, if you want your product to thrive, you need to serve all of your users well, including those in remote locations. Solving this on your own is far from easy: it requires servers spread across the globe, so that every user is reasonably close to one of them.

And this is only the first cause of latency that you’ll need to consider!

Latency Cause #2 – Traffic Loads on the Network

The second cause of latency stems not from location but from traffic: the sheer number of users and applications using a network at any one time.

Between streaming, gaming, mobile applications, and the Internet of Things (IoT), internet traffic is reaching unprecedented volumes. At peak times, networks can become overwhelmed and bandwidth capacity can quickly be exceeded. Data then has to wait in queues at congested routers and switches, which increases latency.

When traffic is light, most networks provide a fast connection that completes user requests quickly, letting applications carry out business functions and keep users happy with no impairment at all.

However, when lots of people are online at once (during peak times, for instance, when many employees try to access a service simultaneously, or when users are streaming, making video calls, or playing online games), all of this network traffic means requests take longer to complete, resulting in higher latency.

Naturally, users will be frustrated if their connections to applications or services slow down, if they run into gameplay issues, or if they get disconnected during high-load periods when they normally wouldn’t. This can have an extremely negative effect on a product’s community and its chances of success.

These problems are particularly difficult to avoid: peak times are, by definition, when most users want to be online, so these surges in network traffic are unavoidable!

Solving this comes down to how your server provider accommodates these rushes of traffic so that end users aren’t negatively affected.

Latency Cause #3 – Overloaded Servers

The final cause of latency is tied to the servers themselves, which must process every request sent by your users.

However powerful they are, servers have a maximum capacity, and they will begin to stutter when overwhelmed with requests. This can happen to dedicated servers during periods of extreme traffic: for instance, if your business runs a Black Friday flash sale, your game hosts a boosted XP weekend, or your app sees a surge in popularity following an influencer promotion. However, it’s much more likely to happen if you are using a shared server or a limited cloud package.

On a shared server, other businesses’ traffic can hurt your server’s performance. Put plainly, if another company on your server starts to experience high traffic, the strain on the server is passed on to your traffic, ruining your users’ experience even though your user numbers are normal and nothing else is affecting their connections.

In normal circumstances, multiple clients can share the same server without any problems, and they get the bonus of paying less because they aren’t using the whole server.

However, things change when another company on the shared server has unexpectedly high traffic. All of that excess traffic puts a strain on the server, resulting in much higher latency as it struggles to keep up with the requests.
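One common way to illustrate this effect (a textbook model, not a description of any particular provider’s servers) is a simple M/M/1 queue, in which the mean time a request spends at the server, waiting plus being processed, is W = 1 / (μ - λ), where μ is the rate at which the server can handle requests and λ is the rate at which they arrive. The sketch below assumes a hypothetical shared server that handles 1,000 requests per second and keeps your own traffic fixed at 300 requests per second, while only the neighbour’s traffic grows.

```python
def mm1_mean_latency_ms(arrival_rate: float, service_rate: float) -> float:
    """Mean time a request spends in an M/M/1 queue (waiting + service), in ms.

    Both rates are in requests per second. The formula W = 1 / (mu - lambda)
    only holds while arrival_rate < service_rate; otherwise the queue grows
    without bound.
    """
    if arrival_rate >= service_rate:
        return float("inf")  # overloaded: requests pile up faster than served
    return 1000.0 / (service_rate - arrival_rate)


SERVICE_RATE = 1_000.0  # hypothetical: the shared server handles 1,000 req/s
YOUR_TRAFFIC = 300.0    # hypothetical: your own steady load, in req/s

# Your traffic stays constant; only the neighbour's traffic changes.
for neighbour in (100.0, 400.0, 600.0, 690.0):
    total = YOUR_TRAFFIC + neighbour
    latency = mm1_mean_latency_ms(total, SERVICE_RATE)
    print(f"neighbour {neighbour:>5.0f} req/s -> utilisation "
          f"{total / SERVICE_RATE:.0%}, mean latency {latency:.1f} ms")
```

With these assumed numbers, your mean latency climbs from about 1.7 ms to 100 ms even though your own traffic never changes, and the increase is steepest as the server approaches full utilisation. That non-linear jump is why a neighbour’s traffic spike can hit your users so hard.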

This can be a difficult problem to plan for, as it only happens in unusual circumstances that trigger a sudden rush of traffic, so you won’t experience it every day. But if this sudden strain hits while you’re running a key marketing campaign for your business, the extra latency can have a big impact on your users’ experience and, as a result, your bottom line!

How Does Ingenuity Cloud Services Overcome Latency?

So now we know where latency comes from, but how can we stop high latency from affecting the user experience?

How can we:

- Give users in remote locations fast, responsive connections?

- Ensure that high network traffic at peak times doesn’t affect our business applications or our users’ experience?

- Prevent performance drops caused by ‘noisy neighbours’ on a shared server?

For the answers, check out the third and final post in our latency series, How Ingenuity Cloud Services Prevents High Latency!